A summary of the POODLE SSLv3 vulnerability and attack:

A vulnerability has been discovered in a decrepit-but-still-widely-supported version of SSL, SSLv3, which gives an attacker a good chance at determining the true value of a single byte of encrypted traffic. This is of limited use in most applications, but in HTTPS (e.g. your web browser, many mobile applications, etc.) an attacker in an MITM (Man-In-The-Middle) position, such as someone operating a wireless router you connect to, can capture and resend the traffic repeatedly until they manage to get a valuable chunk of it assembled in the clear. (This is done by manipulating cleartext traffic, to the same or any other site, and injecting some JavaScript into that traffic for your browser to run. The rogue JS function is what reloads the secure site, offscreen where you can’t see it happening, until the attacker gets what s/he needs out of it.)

That “valuable chunk” is the cookie that validates your user login on whatever secure website you happen to be browsing – your bank, webmail, eBay or Amazon account, etc. By replaying that cookie, the attacker can now hijack your logged-in session directly on his/her own device, and from there can do anything that you would be able to do – make purchases, transfer funds, change the password, change the associated email account, et cetera.

It reportedly takes 60 seconds or less for an attacker in an MITM position (again, typically someone in control of a router your traffic is being directed through, which is most often going to be a wireless router – maybe even one you don’t realize you’ve connected to) to replay traffic enough to capture the cookie using this attack.

Worth noting: SSLv3 is hopelessly obsolete, but it’s still widely supported, in part because IE6 on Windows XP needs it and many large enterprises are STILL using IE6. Many sites and servers proactively disabled SSLv3 quite some time ago, and for those, you’re fine. However, many large sites still have not – a particularly egregious example being Citibank, to whom you can still connect with SSLv3 today. As long as your client application (web browser) and the remote site (web server) both support SSLv3, an MITM can force a downgrade dance, telling each side that the OTHER side only supports SSLv3, forcing that protocol even though it’s strongly deprecated.

I’m an end user – what do I do?

Disable SSLv3 in your browser. If you use IE, there’s a checkbox in Internet Options you can uncheck to remove SSLv3 support. If you use Firefox, there’s a plugin for that. If you use Chrome, you can start Chrome with a command-line option that disables SSLv3 for now, but that’s kind of a crappy “fix”, since you’d have to make sure to start Chrome either from the command line or from a particular shortcut every time (and, for example, clicking a link in an email that started up a new Chrome instance would fail to do so).

Instructions, with screenshots, are available at https://zmap.io/sslv3/ and I won’t try to recreate them here; they did a great job.

I will note specifically here that there’s a fix for Chrome users on Ubuntu that does fairly trivially mitigate even use-cases like clicking a link in an email with the browser not already open:

* Open /usr/share/applications/google-chrome.desktop in a text editor

* For any line that begins with "Exec", add the argument --ssl-version-min=tls1

* For instance the line "Exec=/usr/bin/google-chrome-stable %U" should become "Exec=/usr/bin/google-chrome-stable --ssl-version-min=tls1 %U"
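If you'd rather not hand-edit every Exec line, the steps above can be sketched as a small shell function. This is only a sketch: the function name add_tls_flag is made up for illustration, and it assumes the binary path is the first token after "Exec=" and contains no spaces. It keeps a .bak copy of the original file and skips lines that already carry the flag.

```shell
#!/bin/sh
# Hypothetical helper (name is illustrative, not part of any package):
# add --ssl-version-min=tls1 to every Exec line of a .desktop file.
add_tls_flag() {
    desktop_file="$1"
    # For each line starting with "Exec=" that does not already contain the
    # flag, insert the flag right after the binary path (the first token).
    # Assumption: the binary path itself contains no spaces.
    # -i.bak edits in place and leaves the original in "$desktop_file.bak".
    sed -i.bak '/^Exec=/{/--ssl-version-min=/!s|^\(Exec=[^ ]*\)|\1 --ssl-version-min=tls1|;}' "$desktop_file"
}
```

After defining the function in a root shell, something like add_tls_flag /usr/share/applications/google-chrome.desktop should do it. Running it a second time leaves the file unchanged.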

You can test to see if your fix for a given browser worked by visiting https://zmap.io/sslv3/ again afterwards – there’s a banner at the top of the page which will warn you if you’re vulnerable. WARNING: caching is enabled on that page, meaning you will have to force-refresh to make certain that you aren’t seeing the old cached version with the banner intact – on most systems, pressing Ctrl-F5 in your browser while on the page will do the trick.

I’m a sysadmin – what do I do?

Disable SSLv3 support in any SSL-enabled service you run – Apache, nginx, Postfix, Dovecot, etc. Worth noting – there is currently no known way to usefully exploit the POODLE vulnerability with IMAPS or SMTPS or any other arbitrary SSL-wrapped protocol; currently HTTPS is the only known protocol that allows an attacker to manipulate traffic in a useful enough way. I would not advise banking on that, though. Disable this puppy wherever possible.

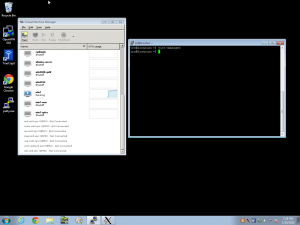

The simplest way to test if a service is vulnerable (at least, from a real computer – Windows-only admins will need to do some more digging):

openssl s_client -connect mail.jrs-s.net:443 -ssl3

The above snippet would check my mailserver. The correct (SSLv3 not available) response begins with a couple of error lines:

CONNECTED(00000003)

140301802776224:error:14094410:SSL routines:SSL3_READ_BYTES:sslv3 alert handshake failure:s3_pkt.c:1260:SSL alert number 40

140301802776224:error:1409E0E5:SSL routines:SSL3_WRITE_BYTES:ssl handshake failure:s3_pkt.c:596:

What you DON’T want to see is a return with a certificate chain in it:

CONNECTED(00000003)

depth=1 C = GB, ST = Greater Manchester, L = Salford, O = COMODO CA Limited, CN = PositiveSSL CA 2

verify error:num=20:unable to get local issuer certificate

verify return:0

---

Certificate chain

0 s:/OU=Domain Control Validated/OU=PositiveSSL/CN=mail.jrs-s.net

i:/C=GB/ST=Greater Manchester/L=Salford/O=COMODO CA Limited/CN=PositiveSSL CA 2

1 s:/C=GB/ST=Greater Manchester/L=Salford/O=COMODO CA Limited/CN=PositiveSSL CA 2

i:/C=SE/O=AddTrust AB/OU=AddTrust External TTP Network/CN=AddTrust External CA Root
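If you have more than one service to check, eyeballing that output gets old fast. Here is a rough sketch that wraps the same openssl command and classifies the result using the two markers shown above ("Certificate chain" versus "handshake failure"). The function names sslv3_status and check_sslv3 are made up for illustration, and it assumes your local openssl still accepts the -ssl3 option. Many newer builds have dropped it, in which case the result comes back "inconclusive" rather than a false all-clear.

```shell
#!/bin/sh
# Hypothetical helpers (names are illustrative only).

# Classify openssl s_client output read from stdin.
sslv3_status() {
    output=$(cat)
    case "$output" in
        # A certificate chain means the SSLv3 handshake went through.
        *"Certificate chain"*) echo "SSLv3 ENABLED" ;;
        # The server refused the SSLv3 handshake, which is what we want.
        *"handshake failure"*) echo "SSLv3 disabled" ;;
        # Anything else (unknown option, connection refused, ...) proves nothing.
        *) echo "inconclusive" ;;
    esac
}

# Run the check against one host:port target.
# Assumption: the local openssl binary still supports -ssl3.
check_sslv3() {
    # </dev/null makes s_client exit after the handshake instead of waiting for input.
    openssl s_client -connect "$1" -ssl3 </dev/null 2>&1 | sslv3_status
}
```

Usage would be along the lines of check_sslv3 mail.jrs-s.net:443, repeated (or looped) for each host:port you care about.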

On Apache on Ubuntu, you can edit /etc/apache2/mods-available/ssl.conf and find the SSLProtocol line and change it to the following:

SSLProtocol all -SSLv2 -SSLv3

Then restart Apache with /etc/init.d/apache2 restart, and you’re golden.
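For anyone with several boxes to fix, the Apache edit above can be scripted. What follows is a minimal sketch, not a tested deployment tool: the function name disable_sslv3_apache is hypothetical, and it assumes the config has exactly the kind of uncommented SSLProtocol line described above. It keeps a .bak copy of the file and leaves commented-out SSLProtocol lines alone.

```shell
#!/bin/sh
# Hypothetical helper (name is illustrative only): rewrite the active
# SSLProtocol directive in an Apache config to disable SSLv2 and SSLv3.
disable_sslv3_apache() {
    conf="$1"
    # Match an uncommented SSLProtocol line (optionally indented) and replace
    # its arguments, preserving the original indentation.
    # -i.bak edits in place and leaves the original in "$conf.bak".
    sed -i.bak 's|^\([[:space:]]*\)SSLProtocol[[:space:]].*|\1SSLProtocol all -SSLv2 -SSLv3|' "$conf"
}
```

On Ubuntu that would be disable_sslv3_apache /etc/apache2/mods-available/ssl.conf as root, ideally followed by apache2ctl configtest before the restart so a typo doesn't take the server down.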

I haven’t had time to research Postfix or Dovecot yet, which are my other two big concerns (even though they theoretically shouldn’t be vulnerable since there’s no way for the attacker to manipulate SMTPS or IMAPS clients into replaying traffic repeatedly).

Possibly also worth noting – I can’t think of any way for an attacker to exploit POODLE without access to web traffic running both in the clear and in a JavaScript-enabled browser, so if you wanted to disable JavaScript completely (which is pretty useless since it would break the vast majority of the web) or if you’re using a command-line tool like wget for something, you should be safe.