Continuing this week’s “making an article so I don’t have to keep typing it” ZFS series… here’s why you should stop using RAIDZ, and start using mirror vdevs instead.

The basics of pool topology

A pool is a collection of vdevs. Vdevs can be any of the following (and more, but we’re keeping this relatively simple):

- single disks (think RAID0)

- redundant vdevs (aka mirrors – think RAID1)

- parity vdevs (aka stripes – think RAID5/RAID6/RAID7, aka single, dual, and triple parity stripes)

The pool itself will distribute writes among the vdevs inside it on a relatively even basis. However, this is not a “stripe” like you see in RAID10 – it’s just distribution of writes. If you make a RAID10 out of 2 2TB drives and 2 1TB drives, the second TB on the bigger drives is wasted, and your after-redundancy storage is still only 2 TB. If you put the same drives in a zpool as two mirror vdevs, they will be a 2x2TB mirror and a 2x1TB mirror, and your after-redundancy storage will be 3TB. If you keep writing to the pool until you fill it, you may completely fill the two 1TB disks long before the two 2TB disks are full. Exactly how the writes are distributed isn’t guaranteed by the specification, only that they will be distributed.

What if you have twelve disks, and you configure them as two RAIDZ2 (dual parity stripe) vdevs of six disks each? Well, your pool will consist of two RAIDZ2 arrays, and it will distribute writes across them just like it did with the pool of mirrors. What if you made a ten disk RAIDZ2, and a two disk mirror? Again, they go in the pool, the pool distributes writes across them. In general, you should probably expect a pool’s performance to exhibit the worst characteristics of each vdev inside it. In practice, there’s no guarantee where reads will come from inside the pool – they’ll come from “whatever vdev they were written to”, and the pool gets to write to whichever vdevs it wants to for any given block(s).

Storage Efficiency

If it isn’t clear from the name, storage efficiency is the ratio of usable storage capacity (after redundancy or parity) to raw storage capacity (before redundancy or parity).

This is where a lot of people get themselves into trouble. “Well obviously I want the most usable TB possible out of the disks I have, right?” Probably not. That big number might look sexy, but it’s liable to get you into a lot of trouble later. We’ll cover that further in the next section; for now, let’s just look at the storage efficiency of each vdev type.

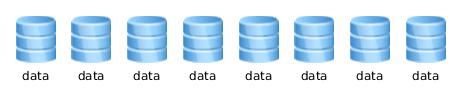

- single disk vdev(s) – 100% storage efficiency. Until you lose any single disk, and it becomes 0% storage efficency…

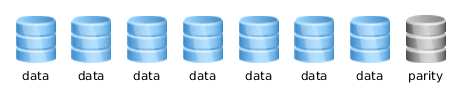

eight single-disk vdevs - RAIDZ1 vdev(s) – (n-1)/n, where n is the number of disks in each vdev. For example, a RAIDZ1 of eight disks has an SE of 7/8 = 87.5%.

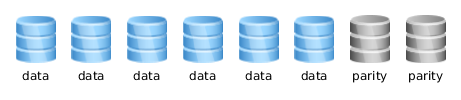

an eight disk raidz1 vdev - RAIDZ2 vdev(s) – (n-2)/n. For example, a RAIDZ2 of eight disks has an SE of 6/8 = 75%.

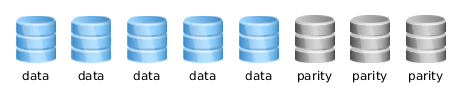

an eight disk raidz2 vdev - RAIDZ3 vdev(s) – (n-3)/n. For example, a RAIDZ3 of eight disks has an SE of 5/8 = 62.5%.

an eight disk raidz3 vdev - mirror vdev(s) – 1/n, where n is the number of disks in each vdev. Eight disks set up as 4 2-disk mirror vdevs have an SE of 1/2 = 50%.

a pool of four 2-disk mirror vdevs

One final note: striped (RAIDZ) vdevs aren’t supposed to be “as big as you can possibly make them.” Experts are cagey about actually giving concrete recommendations about stripe width (the number of devices in a striped vdev), but they invariably recommend making them “not too wide.” If you consider yourself an expert, make your own expert decision about this. If you don’t consider yourself an expert, and you want more concrete general rule-of-thumb advice: no more than eight disks per vdev.

Fault tolerance / degraded performance

Be careful here. Keep in mind that if any single vdev fails, the entire pool fails with it. There is no fault tolerance at the pool level, only at the individual vdev level! So if you create a pool with single disk vdevs, any failure will bring the whole pool down.

It may be tempting to go for that big storage number and use RAIDZ1… but it’s just not enough. If a disk fails, the performance of your pool will be drastically degraded while you’re replacing it. And you have no fault tolerance at all until the disk has been replaced and completely resilvered… which could take days or even weeks, depending on the performance of your disks, the load your actual use places on the disks, etc. And if one of your disks failed, and age was a factor… you’re going to be sweating bullets wondering if another will fail before your resilver completes. And then you’ll have to go through the whole thing again every time you replace a disk. This sucks. Don’t do it. Conventional RAID5 is strongly deprecated for exactly the same reasons. According to Dell, “Raid 5 for all business critical data on any drive type [is] no longer best practice.”

RAIDZ2 and RAIDZ3 try to address this nightmare scenario by expanding to dual and triple parity, respectively. This means that a RAIDZ2 vdev can survive two drive failures, and a RAIDZ3 vdev can survive three. Problem solved, right? Well, problem mitigated – but the degraded performance and resilver time is even worse than a RAIDZ1, because the parity calculations are considerably gnarlier. And it gets worse the wider your stripe (number of disks in the vdev).

Saving the best for last: mirror vdevs. When a disk fails in a mirror vdev, your pool is minimally impacted – nothing needs to be rebuilt from parity, you just have one less device to distribute reads from. When you replace and resilver a disk in a mirror vdev, your pool is again minimally impacted – you’re doing simple reads from the remaining member of the vdev, and simple writes to the new member of the vdev. In no case are you re-writing entire stripes, all other vdevs in the pool are completely unaffected, etc. Mirror vdev resilvering goes really quickly, with very little impact on the performance of the pool. Resilience to multiple failure is very strong, though requires some calculation – your chance of surviving a disk failure is 1-(f/(n-f)), where f is the number of disks already failed, and n is the number of disks in the full pool. In an eight disk pool, this means 100% survival of the first disk failure, 85.7% survival of a second disk failure, 66.7% survival of a third disk failure. This assumes two disk vdevs, of course – three disk mirrors are even more resilient.

But wait, why would I want to trade guaranteed two disk failure in RAIDZ2 with only 85.7% survival of two disk failure in a pool of mirrors? Because of the drastically shorter time to resilver, and drastically lower load placed on the pool while doing so. The only disk more heavily loaded than usual during a mirror vdev resilvering is the other disk in the vdev – which might sound bad, but remember that it’s no more heavily loaded than it would’ve been as a RAIDZ member. Each block resilvered on a RAIDZ vdev requires a block to be read from each surviving RAIDZ member; each block written to a resilvering mirror only requires one block to be read from a surviving vdev member. For a six-disk RAIDZ1 vs a six disk pool of mirrors, that’s five times the extra I/O demands required of the surviving disks.

Resilvering a mirror is much less stressful than resilvering a RAIDZ.

One last note on fault tolerance

No matter what your ZFS pool topology looks like, you still need regular backup.

Say it again with me: I must back up my pool!

ZFS is awesome. Combining checksumming and parity/redundancy is awesome. But there are still lots of potential ways for your data to die, and you still need to back up your pool. Period. PERIOD!

Normal performance

It’s easy to think that a gigantic RAIDZ vdev would outperform a pool of mirror vdevs, for the same reason it’s got a greater storage efficiency. “Well when I read or write the data, it comes off of / goes onto more drives at once, so it’s got to be faster!” Sorry, doesn’t work that way. You might see results that look kinda like that if you’re doing a single read or write of a lot of data at once while absolutely no other activity is going on, if the RAIDZ is completely unfragmented… but the moment you start throwing in other simultaneous reads or writes, fragmentation on the vdev, etc then you start looking for random access IOPS. But don’t listen to me, listen to one of the core ZFS developers, Matthew Ahrens: “For best performance on random IOPS, use a small number of disks in each RAID-Z group. E.g, 3-wide RAIDZ1, 6-wide RAIDZ2, or 9-wide RAIDZ3 (all of which use ⅓ of total storage for parity, in the ideal case of using large blocks). This is because RAID-Z spreads each logical block across all the devices (similar to RAID-3, in contrast with RAID-4/5/6). For even better performance, consider using mirroring.“

Please read that last bit extra hard: For even better performance, consider using mirroring. He’s not kidding. Just like RAID10 has long been acknowledged the best performing conventional RAID topology, a pool of mirror vdevs is by far the best performing ZFS topology.

Future expansion

This is one that should strike near and dear to your heart if you’re a SOHO admin or a hobbyist. One of the things about ZFS that everybody knows to complain about is that you can’t expand RAIDZ. Once you create it, it’s created, and you’re stuck with it.

Well, sorta.

Let’s say you had a server with 12 slots to put drives in, and you put six drives in it as a RAIDZ2. When you bought it, 1TB drives were a great bang for the buck, so that’s what you used. You’ve got 6TB raw / 4TB usable. Two years later, 2TB drives are cheap, and you’re feeling cramped. So you fill the rest of the six available bays in your server, and now you’ve added an 12TB raw / 8TB usable vdev, for a total pool size of 18TB/12TB. Two years after that, 4TB drives are out, and you’re feeling cramped again… but you’ve got no place left to put drives. Now what?

Well, you actually can upgrade that original RAIDZ2 of 1TB drives – what you have to do is fail one disk out of the vdev and remove it, then replace it with one of your 4TB drives. Wait for the resilvering to complete, then fail a second one, and replace it. Lather, rinse, repeat until you’ve replaced all six drives, and resilvered the vdev six separate times – and after the sixth and last resilvering finishes, you have a 24TB raw / 16TB usable vdev in place of the original 6TB/4TB one. Question is, how long did it take to do all that resilvering? Well, if that 6TB raw vdev was nearly full, it’s not unreasonable to expect each resilvering to take twelve to sixteen hours… even if you’re doing absolutely nothing else with the system. The more you’re trying to actually do in the meantime, the slower the resilvering goes. You might manage to get six resilvers done in six full days, replacing one disk per day. But it might take twice that long or worse, depending on how willing to hover over the system you are, and how heavily loaded it is in the meantime.

What if you’d used mirror vdevs? Well, to start with, your original six drives would have given you 6TB raw / 3TB usable. So you did give up a terabyte there. But maybe you didn’t do such a big upgrade the first time you expanded. Maybe since you only needed to put in two more disks to get more storage, you only bought two 2TB drives, and by the time you were feeling cramped again the 4TB disks were available – and you still had four bays free. Eventually, though, you crammed the box full, and now you’re in that same position of wanting to upgrade those old tiny 1TB disks. You do it the same way – you replace, resilver, replace, resilver – but this time, you see the new space after only two resilvers. And each resilvering happens tremendously faster – it’s not unreasonable to expect nearly-full 1TB mirror vdevs to resilver in three or four hours. So you can probably upgrade an entire vdev in a single day, even without having to hover over the machine too crazily. The performance on the machine is hardly impacted during the resilver. And you see the new capacity after every two disks replaced, not every six.

TL;DR

Too many words, mister sysadmin. What’s all this boil down to?

- don’t be greedy. 50% storage efficiency is plenty.

- for a given number of disks, a pool of mirrors will significantly outperform a RAIDZ stripe.

- a degraded pool of mirrors will severely outperform a degraded RAIDZ stripe.

- a degraded pool of mirrors will rebuild tremendously faster than a degraded RAIDZ stripe.

- a pool of mirrors is easier to manage, maintain, live with, and upgrade than a RAIDZ stripe.

- BACK. UP. YOUR POOL. REGULARLY. TAKE THIS SERIOUSLY.

TL;DR to the TL;DR – unless you are really freaking sure you know what you’re doing… use mirrors. (And if you are really, really sure what you’re doing, you’ll probably change your mind after a few years and wish you’d done it this way to begin with.)

Well said Jim, just the info I was looking for before I invest into ZFS. Your post at r/DataHoarder brought me over, and I see that Allan Jude is here too. Frosty tech mojo, thanks. Now I got to see about LTO-6/LTFS options.

Great post, I was thinking of migrating my 4x3TB mirror vdevs over to RAIDZ2 when I got around to purchasing a couple more drives, but you’ve reaffirmed my original choice of mirrors over Z. Can you weigh in on your preferred method of backing up a pool? Mine does not have a backup (I know, I know) and I’m using a little over 4TB of my 5.2TB capacity. I’m using FreeNAS and there are a number of options to choose. It’s all media so it isn’t necessarily “critical” but it would be nice.

http://sanoid.net/ – you don’t necessarily need to implement the entire sanoid platform, the syncoid tool will work with any dataset.

Currently doesn’t have a recursive feature (you need to replicate each dataset individually, so if your pool had 20 datasets, you’d have to use 20 commands) but I’m planning on implementing recursion Real Soon Now ™. If you’re impatient, you can always do something like:root@yourbox:~# for dataset in `zfs list -Hr poolname | awk print $1`> do

> syncoid $dataset root@remotehost:poolname/$dataset

> done

(Strike that, I implemented recursive synchronization a while back. ^_^ )

You might just want to use FreeNAS built-in replication, then. Set up another FreeNAS box – the drives and topology can be wildly different, it doesn’t matter – and set up replication from one to the other. Whether you use syncoid from the CLI or use FreeNAS replication from its GUI, ultimately you’re just using an easy-mode wrapper for native ZFS replication either way.

Forgive me if my question is ignorant, till new to FreeNAS and ZFS. I understand the performance gain that can be had by multiple mirrored vdevs, but this presumes a fairly robust ability to quickly back up the entire zpool if you cannot replace that drive fast enough. If you lose even a single mirrored vdev you lose the entire pool.

When performance is less of a concern, but local survivability is, would RADZ2 still not present a better solution? And of course, off-site backups are always necessary, regardless.

Thanks!

I’m not sure I see what you’re getting at. I was pretty clear in the article: rebuilds are tremendously faster on a pool of mirrors, so the actual exposure window tends to be lower, particularly considering the way that drives frequently fail in rapid succession. If you’re using a pool of fairly narrow raidz2 vdevs, the rebuild time is close enough that you could make an argument for raidz2 being safer – but then, if you’re using a pool of fairly small raidz2 vdevs, you aren’t really who I was trying to reach in the first place. I’m trying to get the attention of the people who are literally cramming every drive they can find into one vdev.

IMO, no. However if you’re looking at a fairly large pool – say, 24 disks – the difference in storage efficiency between four 6-disk raidz2 vdevs (67%) and 12 2-disk mirror vdevs (50%) might be worth it. Depends on your future plans for expandability, your need for performance, etc. (In general, though, I’d generally argue that if the difference between 67% and 50% is make or break for you, you’re probably architecting your storage too close to the wire in the first place.)

Actually with ZFS resilvers are slow and painful regardless which disk config you go with. One may be slightly quicker than another but overall all are within the same order of magnitude of slowness… Ditto for large deletes.

I’ve read through your article a few times and I may be missing something but I can’t get the math to add up.

I’ll talk speed later and cover redundancy for now.

Your grasp of statistics (and topology) may be a little less solid than you think. You’re contrasting RAID10 and a pool of mirrors, but in terms of both storage efficiency and resiliency they’re identical. (The pool of mirrors is higher IOPS performance, but that’s another topic entirely.)

40% isn’t the best bet in the world, but it’s one hell of a lot better than playing the lotto. Add in the lower exposure to multiple failures due to the faster rebuilds, and… well, I already said it all in the article.

That’s because you’re not struggling for IOPS. As long as you’re looking for relatively lightly loaded relatively large block performance, a striped array will do fine. Get down into the “platter chatter” territory – in the most extreme case, 4K random I/O – and you’ll discover you’re in an entirely different world.

You realize an order of magnitude is literally a factor of 10, right? As in, the difference between a 1 day rebuild and a 10 day rebuild?

In the real world, you’re usually going to look at rebuilds taking anywhere from 1.5x to 3x as long on a single RAIDZ vdev as they would take on the same disks in a pool of mirrors. It can vary somewhat, but that’s basically the range you’ll probably be looking at.

This posting is far superior to the official recommendations from Solaris. 🙂

http://www.solarisinternals.com/wiki/index.php/ZFS_Best_Practices_Guide#Should_I_Configure_a_RAIDZ.2C_RAIDZ-2.2C_RAIDZ-3.2C_or_a_Mirrored_Storage_Pool.3F

and,

http://www.solarisinternals.com/wiki/index.php/ZFS_Best_Practices_Guide#Mirrored_Configuration_Recommendations

Nice article, Jim.

Maybe to ‘calm’ the paranoia about multiple disk failure you could add a word or two about hot-spares…?

Interesting article. My server is limited to maximum 5 discs. What ZFS configuration do you recommend for that? Currently, i have a raidz1 running with 3 discs, however, i am interested in adding two disks and using raidz2.

However, now i thought about another interesting option. I use four of the disks in a mirror vdev and the fifth one for backup of the most important files. This is for home usage and high performance is not the most important matter.

Another consideration is how the new pool will be rebuilt. Any thoughts?

Add two new disks, create a separate pool using the two new disks as a mirror vdev. Migrate all your data to the new pool with the single mirror vdev. Destroy your old pool, freeing those three disks. Add two of them as another mirror vdev to the new pool. Do, well, whatever with the fifth one – a single-disk pool to hold backups is fine.

Alternate answer – most of the caveats about raidz don’t really apply all that much to a three-disk RAIDZ1. Yes, it’s only a single disk of parity… but it’s also only across three total disks. So you could also just choose to add the two new disks as a mirror vdev to your existing pool, which would then have your current three-disk raidz1 vdev and one two-disk mirror vdev. It’s a bit of a mutt, but that shouldn’t really matter for home use. And you wouldn’t have to worry about migrating data, just add the new disks and you’re done.

That said – you should absolutely be backing up. So if you don’t already have a backup plan, put one together. And use it.

Thanks for your thoughts. I forgot to mention that i already have backup plans. I don’t know when i have time to fix this, but i will put o comment of the success.

Completely agree with this, after initially building my pool as a 3x4TB RAIDZ1 I’ve realised about a year later that I really should have grabbed another 4TB drive and used those mirrors.

Realistically I’m only using about 5.8TB of the 7TB I’ve got available and it’s a pain now having to consider upgrades as I’ll likely have to either build an entire other redundant array to rebuild the pool or just suck it up and lose some of my older backups.

Eventually likely will move to a few sets of 2x4TB vdevs for easier upgrade, especially since the 8TB IronWolfs are getting a little more affordable

Good write up, Jim! Now I know what strategy I’ll be using for my ZFS Servers.

Excellent article, I mistaken used RAID-Z1 in my pool on my personal file server and I’ve regretted it more than once. I just bought new drives and I’m building a new pool with mirrored Vdevs and migrating the old pool’s drives (newest first) as mirrors in the new pool. I no longer have to buy six drives at a time to upgrade pool capacity and no more walking on eggshells past the server while it takes as long as 48 hours to resilver.

Good article which unfortunately doesn’t cover the ability of ZFS to define n-way mirror vdevs.

seen some 7 years later:

I had planned to buy 7 12 tb drives for some raidz, but seeing this makes me plan for 2 2x18TB mirrors instead. They fit in my old box, sort of and it seems to be cheap just adding 5 extra sata plugs on an extension board, so there is room for one of these hot spares.

I dare now to buy cheaper drives, and replacing them seems not too hard.

You changed my mind – which is good since I am now moving for a simpler setup.

Uh, I wish I had come across this article earlier. Instead I consulted a company to design our solution – but I’m not sure, they actually delivered the best.

Currently I run a wide pool of 20x 14TB disks as raidz2, 6.4TB NMVe card as SLOG+L2Arc (2:4), 256GB RAM. But write speeds are way worse than I expected. Our standard workload is async, big files of 150-250mb, a lot of files (single projects usually have 1-10TB). I’ve disabled atime, set recordsize to 1m, enabled compression, xattr=sa. But still my fio tests are just sad 🙁

I need about 250TB of storage available, we expect 50TB rolling each month (although those numbers are quite uncertain; they were never tracked before and the new system is still in testing). My first migration of all the old data is done and I’d prefer not to do it all again (just delta when going live), but if I need to rebuild the pool setup, I will go for it.

Perhabs someone can give me a quick hint?

“This is one that should strike near and dear to your heart if you’re a SOHO admin or a hobbyist. One of the things about ZFS that everybody knows to complain about is that you can’t expand RAIDZ. Once you create it, it’s created, and you’re stuck with it.”

For future reference :

OpenZFS supports raidz expansion since zfs 2.2.2 ( https://github.com/openzfs/zfs/pull/15022 )

Given the new OpenZFS RaidZ Expansion of single drives to an existing vdev (https://www.youtube.com/watch?v=tqyNHyq0LYM, https://github.com/openzfs/zfs/discussions/15232), does this at all change your opinion on almost never using RAIDZ, mostly vdev mirroring? Thanks.

Thank you so much for this view!

I have used two different QNAP 2-bay NAS the last years, but now i got out of space, so i was looking around if i was doing some sort of raid or keep the mirror that has never failed me for 15 years.

I have just setup ZFS with mirror vdevs, and just wait to add the two last 8 TB drives! And life is so easy!

Not really. It does remove one of the more minor complaints with RAIDz, but RAIDz expansion isn’t exactly a panacea–for example, if you expand a 6-wide RAIDz2 to 10-wide, your existing data will still be written entirely in six-wide stripes, despite it being distributed around a ten-wide Z2.

Taken to the extreme–which is where **a lot** of hobbyists will take that–this means that a 95% full 6-wide Z2 expanded to 7-wide will still have 81.4% of its raw capacity storing data on 6-wide stripes, with a naive SE of 67%… and the remaining 19.6% of the “upgraded” pool’s raw capacity will have a naive SE of 71.4%, but with the padding necessary on those 7-wide stripes, the *real* storage efficiency will actually be *worse* than the original six-wide Z2’s was.

What if you expand several drives at once, maintaining an ideal width, and avoiding the need for padding? Well, you expand that 6-wide Z2 to 10-wide, and now you’ve got 57% of its raw capacity still written in 6-wide stripes with a naive SE of 67%, and the remaining 43% can then be written with a naive SE of 80%. In addition to the performance implications, this means that (if we assume 10TB disks) instead of your expanded 10-wide Z2 having an after-parity capacity of 80TB, it instead has an after-parity capacity of only 72.6TB.

None of this is simple, or ideal, nor does it match the expectations most folks who haven’t put much thought into this will have. So I’m still stanning mirrors pretty hard!

However, I do think that the new RAIDz expansion code offers a very interesting possibility by example–if we instead can tell ZFS “no matter how many drives I put in, I want you to write the stripes at a maximum of six wide” you CAN have fairly easy, safe expansion without a lot of frustrating complexity. This is still mostly going to appeal to hobbyists, IMO, because serious storage admins will be planning in terms of multiple vdevs, not one single vdev–and if you’re planning on multiple vdevs in the first place, you’re right back to the way pool expansion was initially planned in ZFS–by adding entire new vdevs to a pool, not by mucking about inside individual vdevs. 🙂