If you’re new here:

Sanoid is an open-source storage management project, built on top of the OpenZFS filesystem and Linux KVM hypervisor, with the aim of providing affordable, open source, enterprise-class hyperconverged infrastructure. Most of what we’re talking about today boils down to “managing ZFS storage” – although Sanoid’s replication management tool Syncoid does make the operation a lot less complicated.

Recently, I deployed two Sanoid appliances to a new customer in Raleigh, NC.

When the customer specced out their appliances, their plan was to deploy one production server and one offsite DR server – and they wanted to save a little money, so the servers were built out differently. Production had two SSDs and six conventional disks, but offsite DR just had eight conventional disks – not like DR needs a lot of IOPS performance, right?

Well, not so right. When I got onsite, I discovered that the “disaster recovery” site was actually a working space, with a mission critical server in it, backed up only by a USB external disk. So we changed the plan: instead of a production server and an offsite DR server, we now had two production servers, each of which replicated to the other for its offsite DR. This was a big perk for the customer, because the lower-specced “DR” appliance still handily outperformed their original server, as well as providing ZFS and Sanoid’s benefits of rolling snapshots, offsite replication, high data integrity, and so forth.

But it still bothered me that we didn’t have solid state in the second suite.

The main suite had two pools – one solid state, for boot disks and database instances, and one rust, for bulk storage (now including backups of this suite). Yes, our second suite was performing better now than it had been on their original, non-Sanoid server… but they had a MySQL instance that tended to be noticeably slow on inserts, and the desire to put that MySQL instance on solid state was just making me itch. Problem is, the client was 250 miles away, and their Sanoid Standard appliance was full – eight hot-swap bays, each of which already had a disk in it. No more room at the inn!

We needed minimal downtime, and we also needed minimal on-site time for me.

You can’t remove a vdev from an existing pool, so we couldn’t just drop the existing four-mirror pool to a three-mirror pool. We could have stuffed the new pair of SSDs somewhere inside the case, but I really didn’t want to give up the convenience of externally accessible hot swap bays.

So what do you do?

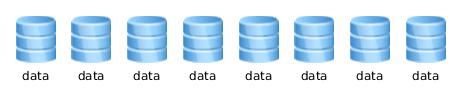

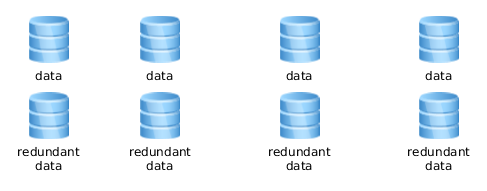

In this case, what you do – after discussing all the pros and cons with the client decision makers, of course – is you break some vdevs. Our existing pool had four mirrors, like this:

    NAME                              STATE     READ WRITE CKSUM
    data                              ONLINE       0     0     0
      mirror-0                        ONLINE       0     0     0
        wwn-0x50014ee20b8b7ba0-part3  ONLINE       0     0     0
        wwn-0x50014ee20be7deb4-part3  ONLINE       0     0     0
      mirror-1                        ONLINE       0     0     0
        wwn-0x50014ee261102579-part3  ONLINE       0     0     0
        wwn-0x50014ee2613cc470-part3  ONLINE       0     0     0
      mirror-2                        ONLINE       0     0     0
        wwn-0x50014ee2613cfdf8-part3  ONLINE       0     0     0
        wwn-0x50014ee2b66693b9-part3  ONLINE       0     0     0
      mirror-3                        ONLINE       0     0     0
        wwn-0x50014ee20b9b4e0d-part3  ONLINE       0     0     0
        wwn-0x50014ee2610ffa17-part3  ONLINE       0     0     0

Each of those mirrors can be broken, freeing up one disk – at the expense of removing redundancy on that mirror, of course. At first, I thought I’d break all the mirrors, create a two-mirror pool, migrate the data, then destroy the old pool and add one more mirror to the new pool. And that would have worked – but it would have left the data unbalanced, since the existing data would stay on the first two mirrors and the majority of reads would only hit two of my three mirrors. I decided to go for the cleanest result possible – a three-mirror pool with all of its data distributed equally across all three mirrors – and that meant I’d need to do my migration in two stages, with two periods of user downtime.

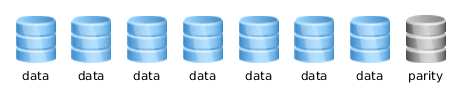

First, I broke mirror-0 and mirror-1.

I detached a single disk from each of my first two mirrors, then cleared its ZFS label afterward.

root@client-prod1:/# zpool detach data wwn-0x50014ee20be7deb4-part3 ; zpool labelclear wwn-0x50014ee20be7deb4-part3

root@client-prod1:/# zpool detach data wwn-0x50014ee2613cc470-part3 ; zpool labelclear wwn-0x50014ee2613cc470-part3

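Now mirror-0 and mirror-1 are each running on a single disk, with no redundancy. Strictly speaking, zpool status will still report the pool as ONLINE, because a detached disk is removed from its vdev rather than marked as failed, but for practical purposes those two vdevs are degraded. The pool is still up and running, though, and the users (who are busily working on storage and MySQL virtual machines hosted on the Sanoid Standard appliance we’re shelled into) are none the wiser.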
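If I were scripting this step instead of typing it by hand, I'd want a guard in front of it: don't start pulling disks out of mirrors unless the pool is completely healthy to begin with. Here's a minimal sketch of that idea. The function names `pool_is_online` and `break_mirrors` are made up for this example and are not part of Sanoid; the only real commands used are `zpool list`, `zpool detach`, and `zpool labelclear`.

```shell
# Hypothetical pre-flight helper (not part of Sanoid).
# Succeeds only if the pool's health column reads ONLINE.
pool_is_online() {
    # -H drops the header line, -o health prints just the health column
    [ "$(zpool list -H -o health "$1")" = "ONLINE" ]
}

# Detach each listed disk from the pool and clear its ZFS label,
# but only if the pool was fully healthy before we started.
break_mirrors() {
    pool=$1
    shift
    if ! pool_is_online "$pool"; then
        echo "refusing to break mirrors: $pool is not ONLINE" >&2
        return 1
    fi
    for disk in "$@"; do
        zpool detach "$pool" "$disk" || return 1
        zpool labelclear "$disk" || return 1
    done
}
```

With that in place, the two commands above would collapse to something like `break_mirrors data wwn-0x50014ee20be7deb4-part3 wwn-0x50014ee2613cc470-part3`.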

Now we can create a temporary pool with the two freed disks.

We’ll also be sure to set compression on by default for all datasets created on or replicated onto our new pool – something I truly wish was the default setting for OpenZFS, since for almost all possible cases, LZ4 compression is a big win.

root@client-prod1:/# zpool create -o ashift=12 tmppool mirror wwn-0x50014ee20be7deb4-part3 wwn-0x50014ee2613cc470-part3

root@client-prod1:/# zfs set compression=lz4 tmppool

We haven’t really done much yet, but it felt like a milestone – we can actually start moving data now!

Next, we use Syncoid to replicate our VMs onto the new pool.

At this point, these are still running VMs – so our users won’t see any downtime yet. After doing an initial replication with them up and running, we’ll shut them down and do a “touch-up” – but this way, we get the bulk of the work done with all systems up and running, keeping our users happy.

root@client-prod1:/# syncoid -r data/images tmppool/images ; syncoid -r data/backup tmppool/backup

This took a while, but I was very happy with the performance – never dipped below 140MB/sec for the entire replication run. Which also strongly implies that my users weren’t seeing a noticeable amount of slowdown! This initial replication completed in a bit over an hour.

Now, I was ready for my first little “blip” of actual downtime.

First, I shut down all the VMs running on the machine:

root@client-prod1:/# virsh shutdown suite100 ; virsh shutdown suite100-mysql ; virsh shutdown suite100-openvpn

root@client-prod1:/# watch -n 1 virsh list

As soon as virsh list showed me that the last of my three VMs was down, I ctrl-C’ed out of my watch command and replicated again, to make absolutely certain that no user data would be lost.
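Watching `virsh list` by eye works fine when you're sitting at the console anyway, but the same wait can be scripted. This is just a sketch: `wait_for_shutoff` and the `TIMEOUT` variable are names I've invented for the example, and it relies on `virsh domstate` printing "shut off" for a domain that has stopped.

```shell
# Hypothetical helper: block until every named domain reports "shut off",
# or give up once TIMEOUT seconds (default 300) have passed in total.
wait_for_shutoff() {
    timeout=${TIMEOUT:-300}
    waited=0
    for dom in "$@"; do
        while [ "$(virsh domstate "$dom")" != "shut off" ]; do
            if [ "$waited" -ge "$timeout" ]; then
                echo "timed out waiting for $dom" >&2
                return 1
            fi
            sleep 1
            waited=$((waited + 1))
        done
    done
}
```

Chained as `wait_for_shutoff suite100 suite100-mysql suite100-openvpn && syncoid -r data/images tmppool/images`, the touch-up replication would start the moment the last VM is down, and not at all if one of them hangs.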

root@client-prod1:/# syncoid -r data/images tmppool/images ; syncoid -r data/backup tmppool/backup

This time, my replication was done in less than ten seconds.

Doing replication in two steps like this is a huge win for uptime, and a huge win for the users – while our initial replication needed a little more than an hour, the “touch-up” only had to copy as much data as the users could store in a few moments, so it was done in a flash.
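If you're wondering why the second pass is so fast: it's an incremental send, so only the blocks that changed since the first snapshot have to move. The sketch below prints (it doesn't run) roughly the raw ZFS commands you'd need for the same two-pass copy without Syncoid. The snapshot names `pass1` and `pass2` and the function name are invented for illustration; Syncoid manages its own snapshot names and handles child datasets its own way, so don't read this as what it literally executes.

```shell
# Print (do not run) raw ZFS commands for a two-pass copy of src to dst.
# Snapshot names "pass1" and "pass2" are made up for this sketch.
print_two_pass_plan() {
    src=$1
    dst=$2
    # Pass 1: full recursive copy while the VMs are still running.
    echo "zfs snapshot -r ${src}@pass1"
    echo "zfs send -R ${src}@pass1 | zfs receive ${dst}"
    # Pass 2: after shutdown, send only what changed since pass1.
    echo "zfs snapshot -r ${src}@pass2"
    echo "zfs send -R -i @pass1 ${src}@pass2 | zfs receive -F ${dst}"
}

print_two_pass_plan data/images tmppool/images
```

Printing the plan rather than executing it is deliberate: on a production box I'd rather read the commands over before anything touches a pool.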

Next, it’s time to rename the pools.

Our system expects to find the storage for its VMs in /data/images/VMname, so for minimum downtime and reconfiguration, we’ll just export and re-import our pools so that it finds what it’s looking for.

root@client-prod1:/# zpool export data ; zpool import data olddata

root@client-prod1:/# zfs set mountpoint=/olddata/images/qemu olddata/images/qemu ; zpool export olddata

Wait, what was that extra step with the mountpoint?

Sanoid keeps the virtual machines’ hardware definitions on the zpool rather than on the root filesystem – so we want to make sure our old pool’s ‘qemu’ dataset doesn’t try to automount itself back to its original mountpoint, /etc/libvirt/qemu.

root@client-prod1:/# zpool export tmppool ; zpool import tmppool data

root@client-prod1:/# zfs set mountpoint=/etc/libvirt/qemu data/images/qemu

OK, at this point our original, degraded zpool still exists, intact, as an exported pool named olddata; and our temporary two disk pool exists as an active pool named data, ready to go.
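Since a wrong mountpoint on the qemu dataset would mean libvirt can't find its VM definitions, it's worth confirming the property before starting anything. A small sketch, assuming nothing beyond the standard `zfs get` command; the helper name `mountpoint_is` is hypothetical.

```shell
# Hypothetical check: does the dataset's mountpoint property match
# what we expect? Returns non-zero and complains on stderr if not.
mountpoint_is() {
    dataset=$1
    expected=$2
    # -H drops headers, -o value prints only the property value
    actual=$(zfs get -H -o value mountpoint "$dataset")
    if [ "$actual" != "$expected" ]; then
        echo "$dataset has mountpoint $actual, expected $expected" >&2
        return 1
    fi
}
```

Used as `mountpoint_is data/images/qemu /etc/libvirt/qemu && virsh start suite100`, the VM only starts if the dataset is pointed where libvirt expects it.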

After less than one minute of downtime, it’s time to fire up the VMs again.

root@client-prod1:/# virsh start suite100 ; virsh start suite100-mysql ; virsh start suite100-openvpn

If anybody took a potty break or got up for a fresh cup of coffee, they probably missed our first downtime window entirely. Not bad!

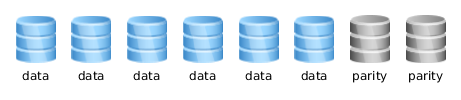

Time to destroy the old pool, and re-use its remaining disks.

After a couple of checks to make absolutely sure everything was working – not that it shouldn’t have been, but I’m definitely of the “measure twice, cut once” school, especially when the equipment is a few hundred miles away – we’re ready for the first completely irreversible step in our eight-disk fandango: destroying our original pool, so that we can create our final one.

root@client-prod1:/# zpool destroy olddata

root@client-prod1:/# zpool create -o ashift=12 newdata mirror wwn-0x50014ee20b8b7ba0-part3 wwn-0x50014ee261102579-part3

root@client-prod1:/# zpool add -o ashift=12 newdata mirror wwn-0x50014ee2613cfdf8-part3 wwn-0x50014ee2b66693b9-part3

root@client-prod1:/# zpool add -o ashift=12 newdata mirror wwn-0x50014ee20b9b4e0d-part3 wwn-0x50014ee2610ffa17-part3

root@client-prod1:/# zfs set compression=lz4 newdata

Perfect! Our new, final pool with three mirrors is up, LZ4 compression is enabled, and it’s ready to go.
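For what it's worth, the create, the two adds, and the compression setting can also be expressed as a single `zpool create`, since it accepts several `mirror` groups at once and `-O` sets a property on the pool's root dataset at creation time. The sketch below only builds and prints that command from a list of disks; `build_create_cmd` is a name I made up, and pairing the disks in the order given is an assumption of the example.

```shell
# Build (but do not run) one zpool create command that pairs the given
# disks into two-way mirrors, in the order they are listed.
build_create_cmd() {
    pool=$1
    shift
    if [ "$#" -eq 0 ] || [ $(( $# % 2 )) -ne 0 ]; then
        echo "need an even, non-zero number of disks" >&2
        return 1
    fi
    # -o sets a pool property, -O sets a dataset property on the root dataset
    cmd="zpool create -o ashift=12 -O compression=lz4 $pool"
    while [ "$#" -gt 0 ]; do
        cmd="$cmd mirror $1 $2"
        shift 2
    done
    echo "$cmd"
}
```

Calling it with `newdata` and the six remaining wwn names prints a single command with three `mirror` groups, which you can eyeball before pasting it into the shell.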

Now we do an initial Syncoid replication to the final, six-disk pool:

root@client-prod1:/# syncoid -r data/images newdata/images ; syncoid -r data/backup newdata/backup

About an hour later, it’s time to shut the VMs down for Brief Downtime Window #2.

root@client-prod1:/# virsh shutdown suite100 ; virsh shutdown suite100-mysql ; virsh shutdown suite100-openvpn

root@client-prod1:/# watch -n 1 virsh list

Once our three VMs are down, we ctrl-C out of ‘watch’ again, and…

Time for our final “touch-up” re-replication:

root@client-prod1:/# syncoid -r data/images newdata/images ; syncoid -r data/backup newdata/backup

At this point, all the actual data is where it should be, in the right datasets, on the right pool.

We fix our mountpoints, shuffle the pool names, and fire up our VMs again:

root@client-prod1:/# zpool export data ; zpool import data tmppool

root@client-prod1:/# zfs set mountpoint=/tmppool/images/qemu tmppool/images/qemu ; zpool export tmppool

root@client-prod1:/# zpool export newdata ; zpool import newdata data

root@client-prod1:/# zfs set mountpoint=/etc/libvirt/qemu data/images/qemu

root@client-prod1:/# virsh start suite100 ; virsh start suite100-mysql ; virsh start suite100-openvpn

Boom! Another downtime window over with in less than a minute.

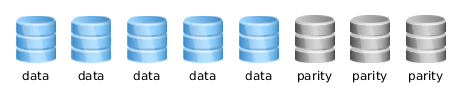

Our TOTAL elapsed downtime was less than two minutes.

At this point, our users are up and running on the final three-mirror pool, and we won’t be inconveniencing them again today. Again we do some testing to make absolutely certain everything’s fine, and of course it is.

The very last step: destroying tmppool.

root@client-prod1:/# zpool destroy tmppool

That’s it; we’re done for the day.

We’re now up and running on only six total disks, not eight, which gives us the room we need to physically remove two disks. With those two disks gone, we’ve got room to slap in a pair of SSDs for a second pool with a solid-state mirror vdev when we’re (well, I’m) there in person, in a week or so. That will also take a minute or less of actual downtime – and in that case, the preliminary replication will go ridiculously fast too, since we’ll only be moving the MySQL VM (less than 20G of data), and we’ll be writing at solid state device speeds (upwards of 400MB/sec, for the Samsung 850 Pro series I’ll be using).
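To put rough numbers on "ridiculously fast", here's the back-of-the-envelope arithmetic as a tiny shell function. It assumes 1G = 1000MB and a perfectly steady transfer rate, which real replication won't give you, so treat the results as ballpark figures. `transfer_seconds` is just a name for the example.

```shell
# Seconds needed to move size_mb megabytes at rate_mb_s MB/sec, rounded up.
transfer_seconds() {
    size_mb=$1
    rate_mb_s=$2
    echo $(( (size_mb + rate_mb_s - 1) / rate_mb_s ))
}

transfer_seconds 20000 400    # 20G MySQL VM at SSD speeds: prints 50
transfer_seconds 504000 140   # 504G at 140MB/sec: prints 3600, i.e. one hour
```

So the MySQL move should need under a minute of copying, and an hour at the 140MB/sec floor I saw on the rust pool corresponds to about half a terabyte moved.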

None of this was exactly rocket science. So why am I sharing it?

Well, it’s pretty scary going in to deliberately degrade a production system, so I wanted to lay out a roadmap for anybody else considering it. And I definitely wanted to share the actual time taken for the various steps – I knew my downtime windows would be very short, but honestly I’d been a little unsure how the initial replication would go, given that I was deliberately breaking mirrors and degrading arrays. But it went great! 140MB/sec sustained throughput makes even pretty substantial tasks go by pretty quickly – and aside from the two intervals with a combined downtime of less than two minutes, my users never even noticed anything happening.

Closing with a plug: yes, you can afford it.

If this kind of converged infrastructure (storage and virtualization) management sounds great to you – high performance, rapid onsite and offsite replication, nearly zero user downtime, and a whole lot more – let me add another bullet point: low cost. Getting started isn’t prohibitively expensive.

Sanoid appliances like the ones we’re describing here – including all the operating systems, hardware, and software needed to run your VMs and manage their storage and automatically replicate them both on and offsite – start at less than $5,000. For more information, send us an email, or call us at (803) 250-1577.
