While benchmarking the Ars Technica Hot Rod server build tonight, I decided to empirically demonstrate the effects of zfs set sync=disabled on a dataset.

In technical terms, sync=disabled tells ZFS “when an application requests that you sync() before returning, lie to it.” If you don’t have applications explicitly calling sync(), this doesn’t result in any difference at all. If you do, it tremendously increases write performance… but, remember, it does so by lying to applications that specifically request that a set of data be safely committed to disk before they do anything else. TL;DR: don’t do this unless you’re absolutely sure you don’t give a crap about your applications’ data consistency safeguards!
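To make "requesting a sync" concrete, here is a minimal Python sketch of what such an application does. In practice the request is usually an `fsync()` on the file (or opening it with `O_SYNC`) rather than a literal `sync()`. The helper name `write_blocks` and its defaults are made up for illustration; this is not what ATTO or Samba actually run.

```python
import os
import tempfile
import time


def write_blocks(path, block_size=4096, count=256, sync_each=False):
    """Write `count` blocks to `path` and return the elapsed seconds.

    With sync_each=True, every block is followed by an fsync(), i.e. the
    application refuses to continue until the OS reports the data is on
    stable storage. This is the request that sync=disabled ignores.
    """
    block = b"\0" * block_size
    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(count):
            f.write(block)
            if sync_each:
                f.flush()             # push Python's own buffer to the OS
                os.fsync(f.fileno())  # ask the OS to commit it to disk
    return time.perf_counter() - start


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        target = os.path.join(d, "testfile")
        print(f"no sync:      {write_blocks(target):.4f}s")
        print(f"fsync/block:  {write_blocks(target, sync_each=True):.4f}s")
```

Pointed at a file on a dataset with the default sync setting, the second number should generally be the larger one; with sync=disabled the gap should mostly vanish, because the `fsync()` returns without waiting for the disks.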

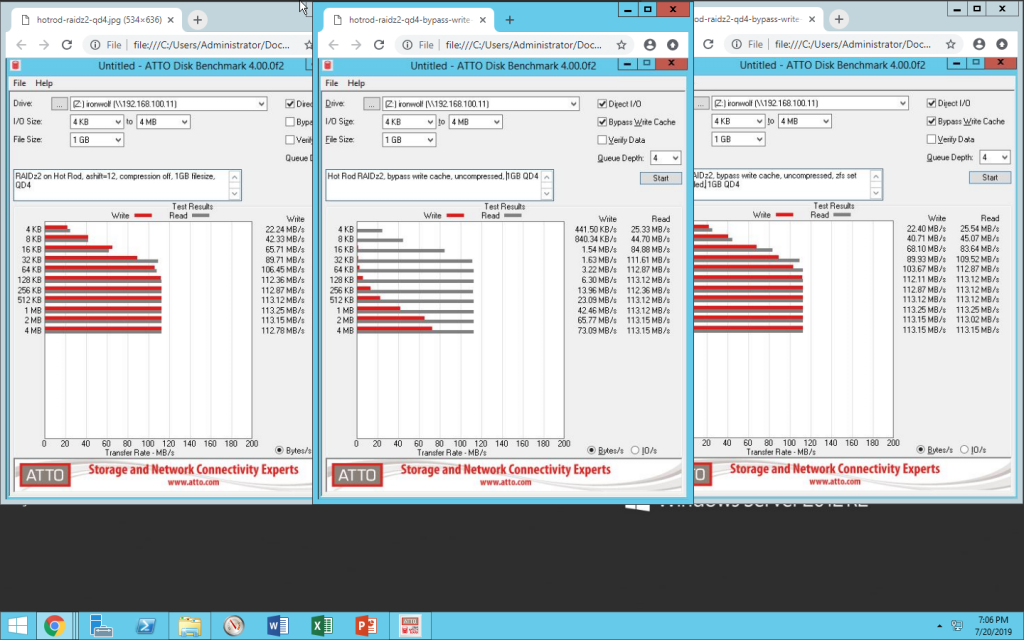

In the screenshot above, we see ATTO Disk Benchmark run across a gigabit LAN to a Samba share on a RAIDz2 pool of eight Seagate IronWolf 12TB disks. On the left: write cache is enabled (meaning, no sync() calls). In the center: write cache is disabled (meaning, a sync() call after each block written). On the right: write cache is disabled, but zfs set sync=disabled has been set on the underlying dataset.

The effect is clear and obvious: zfs set sync=disabled lies to applications that request sync() calls, resulting in the exact same performance as if they’d never called sync() at all.

Now you need to do the same test with and without a fast NVMe ZIL cache. The ZIL is a cache designed to acknowledge these write requests quickly when requested, while the write continues to happen in the background.

You should find that the slowdown from leaving sync enabled is greatly reduced.

It would be interesting to see the same benchmark with a ZIL device, to see if it can be as good as (or better than) sync=disabled. ATTO may not be the best tool for it, as it is a pretty short benchmark.
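For a longer run than ATTO gives, one option is a small script that keeps issuing synced writes for a fixed duration and reports the average throughput. The following is only a rough sketch: the function name `sustained_sync_mbps`, the 128 KiB block size, and the 30-second default are arbitrary choices, and it measures a local file rather than a Samba share.

```python
import os
import tempfile
import time


def sustained_sync_mbps(path, seconds=30.0, block_size=128 * 1024):
    """Write fsync()ed blocks to `path` for about `seconds`; return MB/s."""
    # Random data, so that dataset compression (if enabled) cannot
    # shrink the writes and inflate the result.
    block = os.urandom(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while True:
            f.write(block)
            f.flush()
            os.fsync(f.fileno())  # one sync request per block
            written += block_size
            elapsed = time.perf_counter() - start
            if elapsed >= seconds:
                break
    return written / elapsed / 1e6


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        rate = sustained_sync_mbps(os.path.join(d, "bench"), seconds=5.0)
        print(f"{rate:.1f} MB/s sustained with fsync after every block")
```

Running it against the same dataset three times (default sync with no log device, default sync with a log device, and sync=disabled) would give the comparison asked for here.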