ZFS stores data in records (OpenZFS devs also call them blocks, and use the two terms interchangeably), which are themselves composed of on-disk sectors. The physical sector size on disk is set by the ashift value at time of vdev creation, and is immutable. The recordsize, on the other hand, is individual to each dataset (although it can be inherited from parent datasets), and can be changed at any time you like, though a change only applies to data written afterward. In 2019, recordsize defaults to 128K if not explicitly set.
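As a minimal sketch of inspecting and changing these values: the pool name `data` and dataset name `data/images` below are hypothetical placeholders, so substitute your own.

```shell
# Hypothetical names: pool "data", dataset "data/images".

# Show the current recordsize and its source (local, inherited, or default).
zfs get recordsize data/images

# Change recordsize on a live dataset. Existing files keep their old
# record size until they are rewritten.
zfs set recordsize=1M data/images

# Show the pool's ashift property. A value of 0 means it was left to
# auto-detection when the vdevs were created.
zpool get ashift data
```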

The general rule of recordsize is that it should closely match the typical workload experienced within that dataset. For example, a dataset used to store high-quality JPGs, averaging 5MB or more, should have recordsize=1M. This matches the typical I/O seen in that dataset – either reading or writing a full 5+ MB JPG, with no random access within each file – quite well; setting that larger recordsize prevents the files from becoming unduly fragmented, ensuring the fewest IOPS are consumed during either read or write of the data within that dataset.
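To put rough numbers on that, here is a small sketch that counts how many records a file of a given size spans. The helper name `records_needed` is made up for illustration, and the count ignores metadata and compression.

```shell
# Records needed to hold a file: ceiling of file size / recordsize.
# $1 = file size in bytes, $2 = recordsize in bytes.
records_needed() {
    echo $(( ($1 + $2 - 1) / $2 ))
}

five_mib=$(( 5 * 1024 * 1024 ))
echo "128K records: $(records_needed "$five_mib" $(( 128 * 1024 )))"   # 40
echo "1M records:   $(records_needed "$five_mib" $(( 1024 * 1024 )))"  # 5
```

The same 5MB JPG is 40 separate records at the default recordsize, but only 5 at recordsize=1M.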

By contrast, a dataset which directly contains a MySQL InnoDB database should have recordsize=16K. That’s because InnoDB defaults to a 16KB page size, so most operations on an InnoDB database will be done in individual 16K chunks of data. Matching recordsize to MySQL’s page size here means we maximize the available IOPS, while minimizing latency on the highly sync()hronous reads and writes made by the database (since we don’t need to read or write extraneous data while handling our MySQL pages).
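A sketch of setting this up, assuming a hypothetical dataset named `data/mysql` and a local MySQL server you can query:

```shell
# Hypothetical dataset name; adjust to your own pool layout.
zfs create -o recordsize=16K data/mysql

# Confirm the page size InnoDB is actually using (16384 bytes by default).
mysql -e "SHOW VARIABLES LIKE 'innodb_page_size';"
```

If the database reports a non-default page size, it probably makes sense to match recordsize to that value instead.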

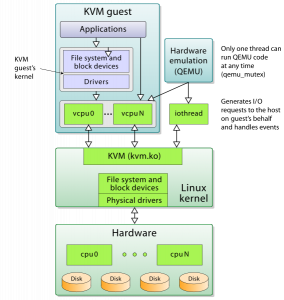

(That’s cluster_size, for QEMU/KVM .qcow2.)

On the other hand, if you’ve got a MySQL InnoDB database stored within a VM, your optimal recordsize won’t necessarily be either of the above – for example, KVM .qcow2 files default to a cluster_size of 64KB. If you’ve set up a VM on .qcow2 with default cluster_size, you don’t want to set recordsize any lower (or higher!) than the cluster_size of the .qcow2 file. So in this case, you’ll want recordsize=64K to match the .qcow2’s cluster_size=64K, even though the InnoDB database inside the VM is probably using smaller pages.

An advanced administrator might look at all of this, determine that a VM’s primary function in life is to run MySQL, that MySQL’s default page size is good, and therefore set both the .qcow2 cluster_size and the dataset’s recordsize to match, at 16K each.
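That matched setup might look something like the following sketch. The dataset name, image path, and image size are all hypothetical.

```shell
# Hypothetical dataset for this VM's disk image.
zfs create -o recordsize=16K data/vm-mysql

# Create a qcow2 image whose cluster_size matches the dataset's recordsize.
# cluster_size is chosen when the image is created.
qemu-img create -f qcow2 -o cluster_size=16K /data/vm-mysql/mysql.qcow2 40G

# Verify: the output includes a cluster_size line, reported in bytes.
qemu-img info /data/vm-mysql/mysql.qcow2
```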

A different administrator might look at all this, determine that the performance of MySQL in the VM with all the relevant settings left to their defaults was perfectly fine, and elect not to hand-tune all this crap at all. And that’s okay.

What if I set recordsize too high?

If recordsize is much higher than the size of the typical storage operation within the dataset, latency will be greatly increased, because every small read has to fetch an entire record and every small write has to read, modify, and rewrite one. This is likely to be incredibly frustrating: IOPS will be very limited, databases will perform poorly, desktop UI will be glacial, etc.
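As a back-of-the-envelope illustration, this sketch computes how much more data ZFS has to handle than the application asked for when an operation is smaller than one record. The helper name `amplification` is made up, and it models the worst case of an uncached record; the ARC can soften this considerably in practice.

```shell
# Ratio of record size to operation size.
# $1 = operation size in bytes, $2 = recordsize in bytes.
amplification() {
    echo $(( $2 / $1 ))
}

echo "16K page on 128K records: $(amplification 16384 131072)x"   # 8x
echo "16K page on 1M records:   $(amplification 16384 1048576)x"  # 64x
```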

What if I set recordsize too low?

If recordsize is a lot smaller than the size of the typical storage operation within the dataset, fragmentation will be greatly (and unnecessarily) increased, leading to unnecessary performance problems down the road. IOPS as measured by artificial tools will be super high, but performance profiles will be limited to those presented by random I/O at the record size you’ve set, which in turn can be significantly worse than the performance profile of larger block operations.

You’ll also screw up compression with an unnecessarily low recordsize; ZFS inline compression dictionaries are per-record, and work by fitting the contents of a single logical record (aka block) into fewer on-disk sectors than it would have needed if uncompressed.

If you set compression=lz4, ashift=12, and recordsize=4K you’ll effectively have NO compression, because your sector size is equal to your recordsize—and you can’t store data in “half a sector”, so compressing a one-sector record down to 0.5 sectors’ worth of data would not result in less usage of on-disk capacity.

Meanwhile, the same dataset with the default 128K recordsize might easily have a 1.7:1 compression ratio.
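The sector arithmetic behind this can be sketched as follows. The helper name `allocated_bytes` is made up, and the 75K compressed size is an illustrative figure rather than a measurement; the model also ignores metadata, parity, and padding.

```shell
# On-disk bytes used by one record: compressed size rounded up to whole sectors.
# $1 = compressed size in bytes, $2 = ashift (sector size is 2^ashift bytes).
allocated_bytes() {
    sector=$(( 1 << $2 ))
    echo $(( ( ($1 + sector - 1) / sector ) * sector ))
}

# A 4K record that compresses to 2K still occupies one full 4K sector.
allocated_bytes 2048 12     # 4096

# A 128K record that compresses to 75K occupies 19 sectors (76K) instead of 32.
allocated_bytes 76800 12    # 77824
```

In the second case, 131072 bytes of logical data land in 77824 bytes on disk, which works out to roughly the 1.7:1 ratio mentioned above.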

Are the defaults good? Do I aim high, or do I aim low?

128K is a pretty reasonable “ah, what the heck, it works well enough” setting in general. It penalizes you significantly on IOPS and latency for small random I/O operations, and it presents more fragmentation than necessary for large contiguous files, but it’s not horrible at either task. There is a lot to be gained from tuning recordsize more appropriately for the task, though.

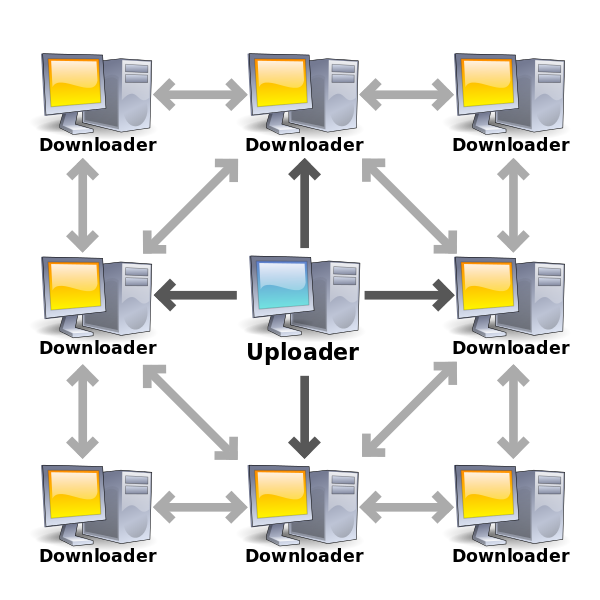

What about bittorrent?

This is one of those cases where things work just the opposite of how you might think – torrents write data in relatively small chunks, and access them randomly for both read and write, so you might reasonably think this calls for a small recordsize. However, the actual data in the torrents is typically huge files, which are accessed in their entirety for everything but the initial bittorrent session.

Since the typical access pattern is “large-file”, most people will be better off using recordsize=1M in the torrent target storage. This keeps the downloaded data unfragmented despite the bittorrent client’s insanely random writing patterns. The torrent client itself is not synchronous (it writes all the time, but rarely if ever calls sync()), so the chunks acquired during the bittorrent session are buffered in RAM as asynchronous writes and committed in batches with each transaction group. That gives ZFS the chance to assemble full records before writing, rather than being forced to put each small piece on disk individually. The ZIL only comes into play for synchronous writes.
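Creating such a target might look like this sketch. The dataset name `data/torrent` is an assumption, and a recordsize above 128K requires the pool's large_blocks feature to be available.

```shell
# Hypothetical dataset for torrent downloads, using 1M records.
zfs create -o recordsize=1M data/torrent

# Confirm the setting took effect.
zfs get recordsize data/torrent
```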

As a proof-of-concept, I used the Transmission client on an Ubuntu 16.04 LTS workstation to download the Ubuntu 18.04.2 Server LTS ISO, with a dataset using recordsize=1M as the target. This workstation has a pool consisting of two mirror vdevs on rust, so high levels of fragmentation would be very easy to spot.

root@locutus:/# zpool export data ; modprobe -r zfs ; modprobe zfs ; zpool import data

root@locutus:/# pv < /data/torrent/ubu*18*iso > /dev/null

883MB 0:00:03 [ 233MB/s] [==================================>] 100%

Exporting the pool and unloading the ZFS kernel module entirely is a weapons-grade-certain method of emptying the ARC entirely; getting better than 200 MB/sec average read throughput directly from the rust vdevs afterward (the transfer actually peaked at nearly 400 MB/sec!) confirms that our torrented ISO is not fragmented.

Note that preallocation settings in your bittorrent client are meaningless when the client is saving to ZFS – you can’t actually preallocate in any meaningful way on ZFS, because it’s a copy-on-write filesystem.